Program evaluation is an essential aspect of healthcare, used to assess the effectiveness and efficiency of initiatives, policies, and interventions in hospitals and other healthcare settings. It involves collecting and analyzing data to evaluate the impact, quality, and cost-effectiveness of programs, with the goal of improving patient outcomes and justifying continued funding. Various evaluation designs, such as experimental, quasi-experimental, and nonexperimental approaches, are employed to determine how programs affect participants' behaviours, knowledge, and healthcare outcomes. Utilization measures, including hospital admissions, emergency room visits, and prescription drug use, are often used to evaluate the success of programs, while also considering financial outcomes and return on investment. Health professionals play a crucial role in program evaluation, requiring specific knowledge and skills to assess the merit, worth, and significance of the programs they deliver.

| Characteristics | Values |
|---|---|
| Purpose | To determine how effective and efficient programs are in reaching their outcomes |
| Data Collection | Collecting data on patient characteristics, services provided, clinical indicators, utilization rates, costs, and revenues |
| Analysis | Analyzing data to identify trends, patterns, and potential areas of improvement |
| Evaluation Design | Experimental, Quasi-experimental, Nonexperimental |
| Evaluation Framework | Formative, Process, Outcome |
| Evaluation Type | Positive Outcome, Impact, Cost-Benefit, Economic |
| Stakeholder Interest | Financial outcomes, return on investment, utilization statistics |
| Limitations | Selection bias, high-cost events in medical care that may not be recurring |
| Skills Required | Knowledge, attributes, and skills to evaluate the impact of services provided |

Patient self-management support programs

There are several models for delivering patient self-management support, including the primary care model, external on-the-ground model, and external call-center model. The primary care model provides self-management support directly through local providers' offices, often including face-to-face interactions. The external on-the-ground model brings support to the patient, whether that be in homes, primary care offices, or community settings, and is often more intensive. The external call-center model relies on telephone or internet interactions and may be initiated by the patient through a hotline or website. These models can be combined with other strategies, such as the use of non-physician providers, group settings, and peer support, to promote self-management.

When evaluating patient self-management support programs, it is essential to consider the impact on participants' behaviours, knowledge, and healthcare outcomes. This can be achieved through evaluation designs such as experimental, quasi-experimental, and nonexperimental approaches. Experimental designs involve randomly assigning participants to a control or treatment group, while quasi-experimental designs compare the treatment group to a similar non-program group. Nonexperimental designs lack a control group, making it challenging to determine the program's impact.

Additionally, stakeholders and interviewees often focus on financial outcomes and return on investment. Utilization statistics, such as emergency room visits, hospital admissions, and prescription drug use, are essential data points for evaluating cost savings and justifying continued funding for the program. However, it is important to address potential biases in evaluation. Two common ones are selection bias and high-cost events in the baseline period that may not recur: if participants enroll after an unusually costly episode, their costs may fall afterwards even without the program, which inflates apparent savings.

Overall, patient self-management support programs aim to improve health outcomes and reduce healthcare costs by empowering patients to manage their health conditions confidently and proactively. By utilising different delivery models and evaluating the programs through various methods, healthcare providers can continuously improve the quality and effectiveness of these programs.


Impact evaluation

Data Collection and Measurement

Comparison Groups

To establish the true impact of a hospital program, it is essential to compare the results of the intervention group with those of a control or comparison group. This can be achieved through experimental or quasi-experimental design. In an experimental design, participants are randomly assigned to the control or treatment group, offering a more accurate assessment of the program's effects. Quasi-experimental designs, on the other hand, compare the treatment group to a similar non-program group without randomisation.
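The random assignment described above can be illustrated with a minimal Python sketch. The participant IDs, the outcome values, and the function names `assign_groups` and `difference_in_means` are all hypothetical and chosen for illustration only; a real evaluation would also need a significance test and an adequate sample size.

```python
import random
from statistics import mean


def assign_groups(participant_ids, seed=0):
    """Randomly split participants into treatment and control groups."""
    rng = random.Random(seed)  # fixed seed so the assignment is reproducible
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def difference_in_means(outcomes, treatment, control):
    """Estimated program effect: mean treatment outcome minus mean control outcome."""
    return mean(outcomes[p] for p in treatment) - mean(outcomes[p] for p in control)


# Hypothetical participants; assignment happens before outcomes are observed.
participants = ["p1", "p2", "p3", "p4", "p5", "p6"]
treatment, control = assign_groups(participants, seed=42)

# Hypothetical follow-up data: emergency room visits per participant.
er_visits = {"p1": 2, "p2": 0, "p3": 3, "p4": 1, "p5": 4, "p6": 1}
effect = difference_in_means(er_visits, treatment, control)
print(f"Estimated effect on ER visits: {effect:+.2f}")
```

A quasi-experimental comparison could reuse `difference_in_means`, but the two groups would come from an existing program group and a matched non-program group rather than from `assign_groups`, so differences between the groups could still reflect selection rather than the program.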

Long-term and Short-term Impact

Cost-benefit Analysis

Economic evaluation is an integral part of assessing a hospital program's impact. It involves analyzing the costs of implementing the program relative to the benefits achieved. Common approaches include cost analysis, cost-benefit analysis, and cost-utility analysis. This information is crucial for stakeholders and funders, helping them understand the return on investment and justifying the allocation of resources for continued or expanded program implementation.

Continuous Improvement


Cost-benefit analysis

Cost-benefit analysis (CBA) is particularly useful when a budget has not been allocated, as it allows for a more transparent comparison of costs and effects than a cost-effectiveness analysis (CEA). This is because CBA measures both costs and effects in monetary terms, allowing for explicit comparisons. For example, a CBA may consider the financial benefits of a program, such as cost savings from reduced hospital admissions, as well as non-financial benefits, such as improved patient health outcomes or quality of life, which must first be assigned a monetary value.
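Because CBA expresses both sides in monetary terms, its core arithmetic is simple. The sketch below is one way to compute the usual summary figures (net benefit, benefit-cost ratio, and return on investment). The cost and benefit categories and all dollar amounts are hypothetical, and the function name `cost_benefit_summary` is an assumption made for illustration.

```python
def cost_benefit_summary(costs, benefits):
    """Summarize a cost-benefit analysis from monetized costs and benefits."""
    total_cost = sum(costs.values())
    total_benefit = sum(benefits.values())
    if total_cost <= 0:
        raise ValueError("total cost must be positive to compute ratios")
    net_benefit = total_benefit - total_cost
    return {
        "total_cost": total_cost,
        "total_benefit": total_benefit,
        "net_benefit": net_benefit,
        "benefit_cost_ratio": total_benefit / total_cost,
        "roi": net_benefit / total_cost,  # return per unit of money spent
    }


# Hypothetical annual figures for a single program (not real data).
program_costs = {"staff": 120_000, "technology": 30_000}
program_benefits = {"avoided_admissions": 180_000, "avoided_er_visits": 45_000}

summary = cost_benefit_summary(program_costs, program_benefits)
print(summary)
```

With these made-up figures the program returns 1.5 units of benefit per unit of cost. A fuller analysis would typically also discount multi-year costs and benefits and test how sensitive the result is to the monetary values assigned to health outcomes.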

In the context of hospitals, CBA can be used to evaluate the implementation of digital health initiatives, such as electronic medical records (EMR), or telehealth programs. For instance, a CBA of the Emergency Telehealth and Navigation (ETHAN) program in Houston, USA, found that it was less costly and provided more benefits than the traditional model of referring non-emergency cases to emergency departments.

CBA can also be applied to specific health conditions or interventions. For example, CBA has been used to evaluate interventions for reducing dementia symptoms, including years of education, Medicare eligibility, hearing aids, and vision correction.

Overall, CBA provides valuable insights for decision-makers in healthcare by helping to identify programs or projects that offer the highest returns in terms of patient care, operational efficiency, and long-term sustainability.


Utilization measures

One example of a utilization management (UM) program is the evaluation of acute care bed utilization in hospitals. The objective of such a program is to reduce the inappropriate use of acute care beds while maintaining quality patient care. This involves analyzing admission criteria, length of stay, and readmission rates, ensuring that hospital beds are utilized efficiently and effectively.
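Two of the measures named above, length of stay and readmission rate, can be computed directly from admission records. The following is a minimal sketch under stated assumptions: the record layout, the field names, and the 30-day readmission window are hypothetical choices, and the records themselves are invented for illustration.

```python
from datetime import date
from statistics import mean

# Hypothetical admission records; "readmitted_30d" assumes a 30-day window.
admissions = [
    {"admit": date(2024, 1, 3), "discharge": date(2024, 1, 7), "readmitted_30d": False},
    {"admit": date(2024, 1, 10), "discharge": date(2024, 1, 12), "readmitted_30d": True},
    {"admit": date(2024, 2, 1), "discharge": date(2024, 2, 7), "readmitted_30d": False},
    {"admit": date(2024, 2, 20), "discharge": date(2024, 2, 24), "readmitted_30d": False},
]


def average_length_of_stay(records):
    """Mean number of days between admission and discharge."""
    return mean((r["discharge"] - r["admit"]).days for r in records)


def readmission_rate(records):
    """Share of admissions followed by a readmission within the window."""
    return sum(r["readmitted_30d"] for r in records) / len(records)


print("Average length of stay (days):", average_length_of_stay(admissions))
print("Readmission rate:", readmission_rate(admissions))
```

Tracking these two figures before and after a utilization management program begins gives a first view of whether bed use is changing, although it does not by itself show that the program caused the change.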

In the context of patient self-management support programs, utilization statistics such as emergency room visits, hospital admissions, hospital days, length of stay, neonatal intensive care unit days, readmissions, and prescription drug use are often reported. These data points provide insights into the financial outcomes of the program, allowing stakeholders to assess return on investment and project savings. However, it is important to consider the potential impact of selection bias, as participants in self-management support programs may have greater motivation to take care of their health, leading to overstated effects of the intervention.

Additionally, utilization measures can be assessed from both the patient's and the physician's perspective. From the patient's perspective, it involves analyzing patient-reported services, which can be subjective. In contrast, the physician's perspective focuses on the volume of medical services offered, including the number of hospitalizations per year, medical acts, patients, and visits. These indicators help evaluate the accessibility, continuity, comprehensiveness, and productivity of care.


Experimental design

There are two primary types of rigorous experimental designs used in implementation and quality improvement evaluations: parallel cluster-randomized and cluster-randomized stepped wedge designs. These designs are referred to as cluster- or group-randomized controlled trials, where the unit of randomization is a cluster or group. Outcome measures are obtained from members of the cluster or group, and these designs randomize centers (hospitals, community sites) or units (clinician groups, departments) rather than individuals to avoid contamination that might occur with individual-level randomization.
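The difference between the two designs is easiest to see in how clusters are allocated. The sketch below assumes the standard forms of each: in the parallel design, whole clusters are randomly split between intervention and control; in the stepped wedge design, every cluster starts in the control condition and crosses over to the intervention at a randomly ordered step. The hospital names and function names are hypothetical.

```python
import random


def parallel_cluster_assignment(clusters, seed=0):
    """Randomly split whole clusters (not individuals) into two arms."""
    rng = random.Random(seed)
    shuffled = list(clusters)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"intervention": shuffled[:half], "control": shuffled[half:]}


def stepped_wedge_schedule(clusters, n_steps, seed=0):
    """Return {cluster: [0 or 1 per period]} for periods 0..n_steps.

    Period 0 is an all-control baseline. Clusters cross over in random
    order and remain in the intervention condition (1) once they start.
    """
    rng = random.Random(seed)
    shuffled = list(clusters)
    rng.shuffle(shuffled)
    schedule = {}
    for i, cluster in enumerate(shuffled):
        start = 1 + (i % n_steps)  # first period with the intervention
        schedule[cluster] = [1 if period >= start else 0
                             for period in range(n_steps + 1)]
    return schedule


# Hypothetical clusters.
hospitals = ["Hospital A", "Hospital B", "Hospital C", "Hospital D"]
arms = parallel_cluster_assignment(hospitals, seed=1)
wedge = stepped_wedge_schedule(hospitals, n_steps=4, seed=1)
for name, row in sorted(wedge.items()):
    print(name, row)
```

In the stepped wedge schedule every cluster eventually receives the intervention, which can make the design easier to accept when withholding a program from some sites is a concern.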

When conducting an experimental evaluation, it is important to structure it to ensure reliable results. This includes obtaining multiple baseline observations, observing and documenting quantitative and qualitative data carefully, and including intervention and follow-up observations to assess the maintenance of effects. Single-group interrupted time series designs, which are quasi-experimental rather than randomized, are often workable for small organizations and can provide reliable evaluations when structured well.
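One simple way to use multiple baseline observations in a single-group interrupted time series is to fit a trend to the baseline, project it forward, and compare the projection with what was actually observed after the intervention. The sketch below takes that simplified approach; it is not a full segmented regression, and the monthly counts and function names are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def its_effect(baseline, post):
    """Average gap between observed post-period values and the
    values projected from the baseline trend."""
    slope, intercept = fit_line(list(range(len(baseline))), baseline)
    gaps = []
    for k, observed in enumerate(post):
        t = len(baseline) + k  # time index continues after the baseline
        gaps.append(observed - (intercept + slope * t))
    return sum(gaps) / len(gaps)


# Hypothetical monthly readmission counts before and after a program starts.
baseline_counts = [10, 12, 14, 16]
post_counts = [13, 15, 17]
print("Average change versus projected trend:", its_effect(baseline_counts, post_counts))
```

A negative result means the observed values fell below what the baseline trend predicted. With only a few baseline points the projected trend is fragile, which is why multiple baseline observations matter.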

Overall, experimental design is a valuable tool for rigorously assessing the implementation of effective practices and comparing different implementation strategies in healthcare settings. By randomly assigning participants to control and treatment groups, researchers can minimize bias and gain insights into the causal relationships between interventions and outcomes.


Frequently asked questions

Program evaluation helps to determine how effective and efficient programs are in reaching their outcomes. It is a way to identify areas of improvement and build upon strengths. It also helps to secure funding for the program.

There are three main evaluation designs: experimental, quasi-experimental, and non-experimental. The experimental design is considered the most effective and accurate as it involves randomly assigning participants to a control or treatment group. The quasi-experimental design compares the treatment group to a similar group that is not involved in the program. The non-experimental design has no control group.

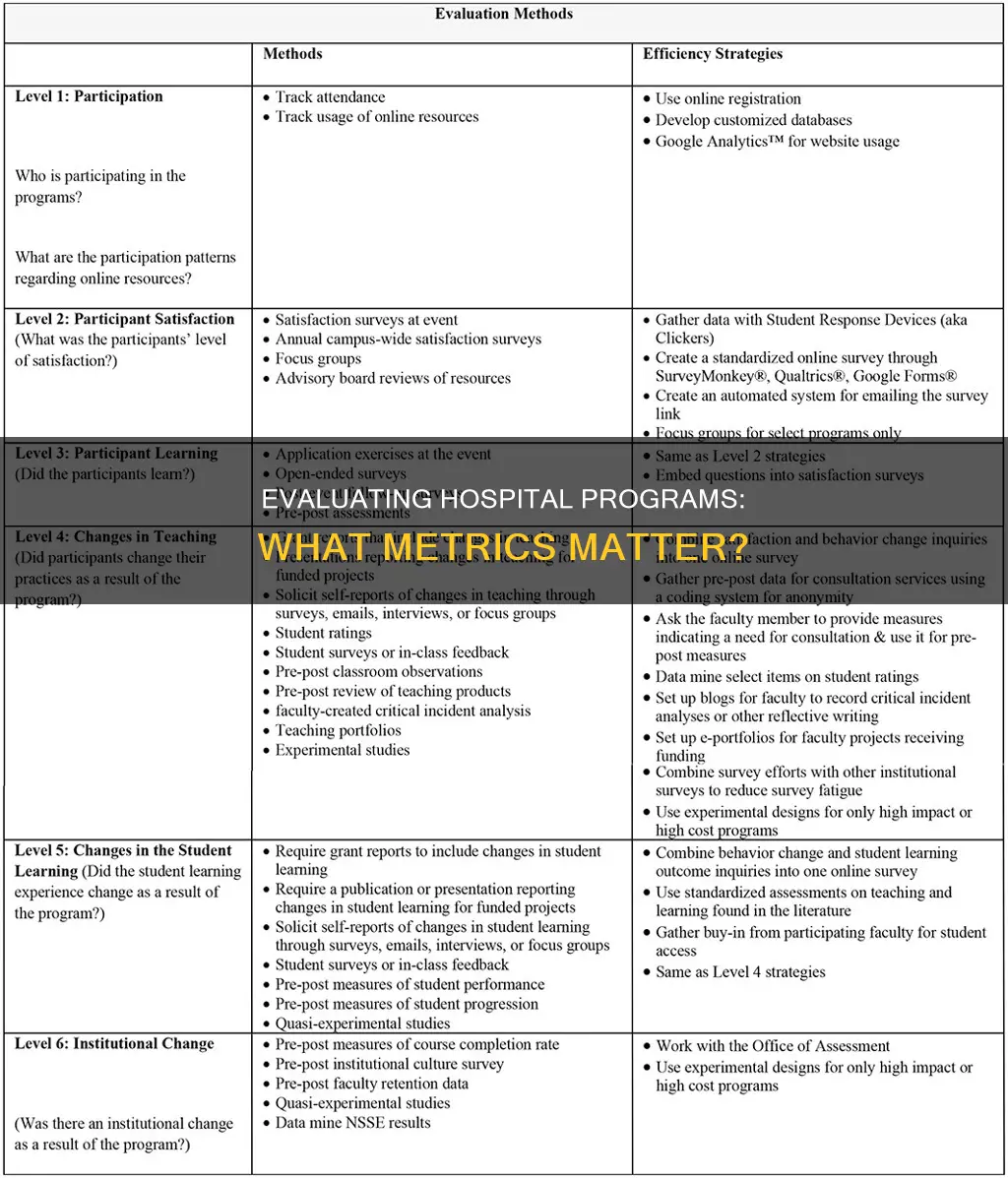

There are several types of evaluations, including formative evaluation, process evaluation, outcome evaluation, impact evaluation, and economic evaluation. Formative evaluation occurs during program development and implementation, while process evaluation assesses the type, quantity, and quality of program activities or services. Outcome evaluation focuses on short- and long-term program objectives, and impact evaluation assesses the program's effect on participants. Economic evaluation examines the programmatic effects relative to program costs.